Counter-Strike code leaked: should you worry? What if your code leaks? Learn how to deliver software securely.

Wednesday, 22 April 2020.

The source code for the game Counter-Strike: GO has leaked today. I’m sad to see that many concerned users have decided to stop playing the game for now, worried about Remote Code Execution (RCE). Valve told them they’ve reviewed the code, and there’s no reason for users to worry.

Here I discuss what everyone should be doing to distribute software to the general public safely, and why I trust this statement:

We have reviewed the leaked code and believe it to be a reposting of a limited CS:GO engine code depot released to partners in late 2017, and originally leaked in 2018. From this review, we have not found any reason for players to be alarmed or avoid the current builds.

— CS2 (@CounterStrike) April 22, 2020

Source code for both CS:GO and TF2 dated 2017/2018 that was made available to Source engine licencees was leaked to the public today. pic.twitter.com/qWEQGbq9Y6

— SteamDB (@SteamDB) April 22, 2020

People are concerned because bad practices, such as security through obscurity or blindly trusting “secure servers” to invoke remote code, are widespread in the software industry.

If you have an iPhone, you probably know applications are sandboxed. In essence, files of a given application aren’t blindly shared with another. You have some permissions settings and fine controls. Some people complain this makes the iOS operating system really closed, but this is actually one of its greatest strengths.

Who gets it right

Apple is definitely a leader when talking about respecting user security and privacy, especially in the smartphone ecosystem. Its Differential Privacy approach relies on processing private user data on users’ own devices. Its Privacy-Preserving Contact Tracing protocol, jointly developed with Google to fight coronavirus (COVID-19), has user privacy as a first-class citizen. It is no different when we talk about software distribution.

Application sandboxing has been part of iOS since the very beginning. Android is also moving in this direction as users grow more concerned about the topic.

FreeBSD and Linux distributions have a history of distributing packages safely through public mirrors thanks to package signing. By the way, FreeBSD has the concept of jails, which let you isolate processes and restrict which computer resources they can access. This is useful to mitigate attack vectors.

Google has also been doing great work bringing safer machines to the general public with Chrome OS.

Traditional computers

However, the situation for “general” computers is not good, with sandboxing still only beginning to flourish. Any company that develops and publishes software for the public at large has an essential responsibility to enforce boundaries and limits, because software on these machines runs with practically unrestricted trust and the risks that come with it.

Browsers are, perhaps, the only well-understood example of a successful sandbox on traditional computers.

Code signing

Code signing is a technique used to confirm software authorship and to provide an indication of provenance, guaranteeing that a program has not been tampered with or corrupted.

If you sign your software, you can:

- Distribute your software over unsecured channels

- Guarantee that the end-user of your software gets what was distributed

- Make your builds auditable if reproducible

If you don’t, you must control the entire chain of custody of your application, and its communication from the distribution channel to the end-user, to assure its security.

Notarization

Notarization is the process of sending your application to Apple so it can check it for malware and malicious components and vouch for the result. You can automate this process in your delivery pipeline, so there is no reason not to use it if you distribute applications to end-users.

It starts from the firmware

Jessie Frazelle from the Oxide Computer Company has a great article about Why open source firmware is important for security.

curl | sh: shame on you! and me!

If you use any Unix-like computer and are reading this post, there is a high chance you installed something with:

$ curl http://example.com/unsafe | sh

And maybe you even created something like this! I’m guilty of taking this shortcut myself.

There are just too many reasons why this is, overall, a bad idea.

Why even bother signing?

If you don’t sign and your server is compromised, your users are at risk, and this is unfair.

You don’t want to let your users down by exposing or destroying their data, and you don’t want to face legal consequences or lose their trust.

This risk can only be partly mitigated by using HTTPS. HTTPS on its own does not give you defense in depth (the Castle Approach). You want to minimize your attack surface.

Why even bother code notarizing?

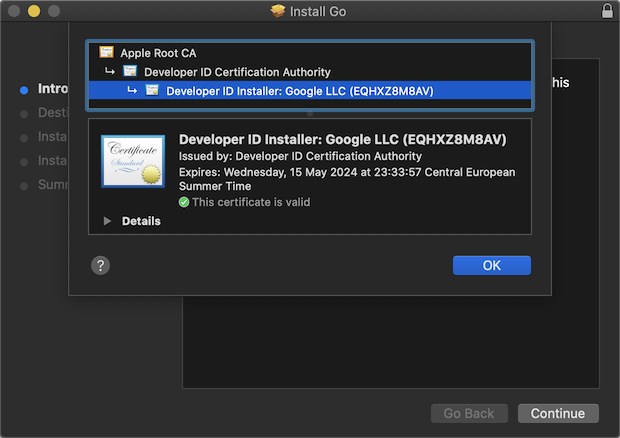

If you notarize your application, your Apple users will likely trust you more and have a smoother user experience when installing your application.

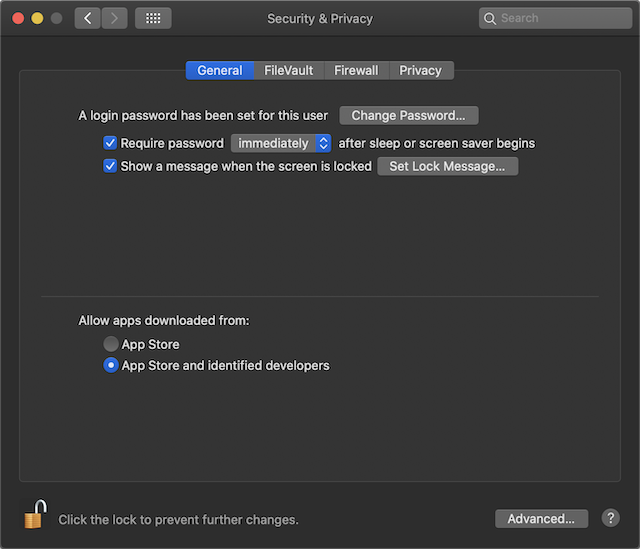

Apple’s Gatekeeper

Apple tightened up software installation, making the operating system more likely to stop users from executing untrusted code. Some people complain that Apple is closing down the platform and that this is a bad thing, but I must disagree. To me, they are trying to make macOS as safe to use as iOS.

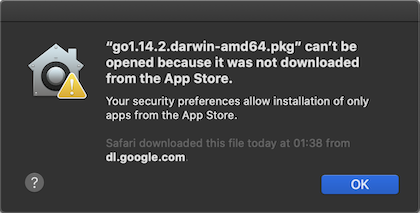

This is what you see if you only allow downloading from the App Store and try to install something from outside:

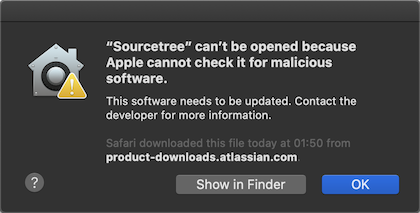

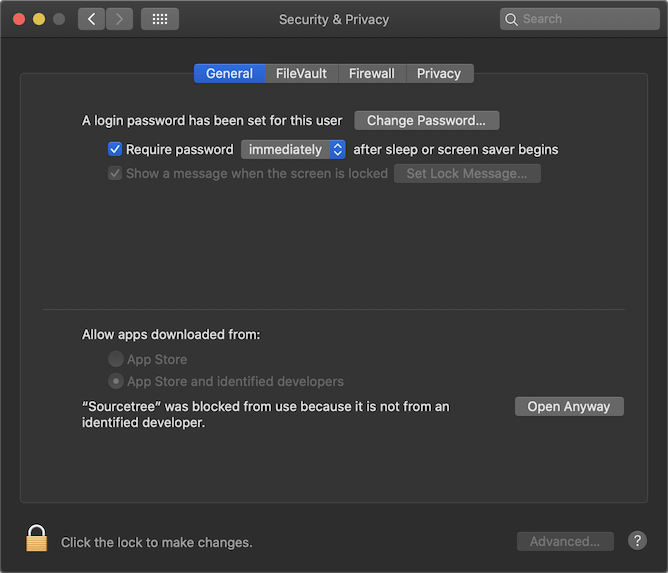

This is what happens if you try to install an application that wasn’t product signed now:

You can quickly get around installing apps from outside the App Store that are product signed:

However, things look slightly more hostile to applications that aren’t product signed:

Atlassian evidently forgot to request an Apple certificate to sign SourceTree.

Writing an application for macOS

Please notice that Apple has two programs with similar names:

- codesign is for signing code and the like

- productsign is for signing packages and the like

For this subject, productsign is what you need.

Please don’t use the curl | sh workaround if you have a public user base, even though I did it in the past. You can do better than me.

Tip: verify macOS .pkg installers with the native installer program or with Suspicious Package.

Further reading list

- Code signing

- About Code Signing

- How to sign your Mac OS X App for Gatekeeper

- Panic: About Gatekeeper

- How to use the Apple Product Security PGP Key

- How To Sign macOS PKGs for Deployment with MDM

Writing an application for Windows

For Windows, you can request a Windows Code Signing certificate from multiple Certificate Authorities (CAs). Make sure you don’t make the mistake of getting a certificate for a domain, though. Both kinds of certificate rely on public-key infrastructure, but they are not interchangeable.

DigiCert is a reliable partner. I used it in the past. I recommend you get a certificate for the maximum amount of time possible, especially if you don’t use Windows regularly.

By the way, if you don’t code sign, your users might get weird security risk messages from Windows antivirus software.

Microsoft documentation

Time-stamping the signature with a remote server is recommended.

Time-stamping was designed to circumvent the trust warning that will appear in the case of an expired certificate. In effect, time-stamping extends the code trust beyond the validity period of a certificate. In the event that a certificate has to be revoked due to a compromise, a specific date and time of the compromising event will become part of the revocation record. In this case, time-stamping helps establish whether the code was signed before or after the certificate was compromised.

Source: Wikipedia article on code signing.

Updating your application

Your updates should be safe too. You should not directly download a binary from a server and replace your file without validation.

Your application must validate that the downloaded files are legitimate before installing them.

With the Go programming language, I used equinox.io for managing my updates safely and successfully in the past, and I recommend it. However, you don’t need any 3rd party provider to do this.

The essentials are:

- You must have a secure device to generate releases and sign them.

- You should keep your private keys safe.

When I was working on releasing a CLI used by hundreds of users, I used to connect to a remote server via SSH. It was protected with public-key infrastructure through my SSH key + password + one-time password in a secure physical location. Access to both the physical location (okay, I admit! Amazon Web Services datacenters!) and to the server was on an as-needed basis.

I’d SSH to it whenever a release was ready and cut the release from there, to avoid exposing the private key.

Things I slacked on doing but would be nice to have:

- Verification for revoked keys

- Kill switch to stop users from downgrading/updating the application to unsafe versions, if I ever released something with a security bug

- A second key pair to serve as a backup if the first leaked

- Friendly key rotation (had no rotation)
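The kill switch from the list above could look roughly like the Go sketch below. It assumes versions of the form MAJOR.MINOR.PATCH and a minimum safe version delivered through some signed release manifest; the names `parseVersion`, `less`, and `allowInstall` are hypothetical, and this is something I did not actually build.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseVersion parses "MAJOR.MINOR.PATCH" into three non-negative integers.
func parseVersion(s string) ([3]int, error) {
	var v [3]int
	parts := strings.Split(s, ".")
	if len(parts) != 3 {
		return v, fmt.Errorf("invalid version %q", s)
	}
	for i, p := range parts {
		n, err := strconv.Atoi(p)
		if err != nil || n < 0 {
			return v, fmt.Errorf("invalid version %q", s)
		}
		v[i] = n
	}
	return v, nil
}

// less reports whether version a sorts strictly before version b.
func less(a, b [3]int) bool {
	for i := range a {
		if a[i] != b[i] {
			return a[i] < b[i]
		}
	}
	return false
}

// allowInstall refuses downgrades below the current version and refuses any
// candidate older than minSafe, the oldest release without a known security bug.
func allowInstall(current, candidate, minSafe string) (bool, error) {
	cur, err := parseVersion(current)
	if err != nil {
		return false, err
	}
	cand, err := parseVersion(candidate)
	if err != nil {
		return false, err
	}
	floor, err := parseVersion(minSafe)
	if err != nil {
		return false, err
	}
	if less(cand, cur) || less(cand, floor) {
		return false, nil
	}
	return true, nil
}

func main() {
	for _, candidate := range []string{"1.5.0", "1.3.9", "1.4.1"} {
		ok, err := allowInstall("1.4.0", candidate, "1.4.2")
		fmt.Println(candidate, ok, err)
	}
}
```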

Things I would do if more users were using it and the perceived risk was greater:

Multiple keys, so that multiple people would have to validate an update before users were allowed to install it. I know Apple uses something like this to roll out their operating system updates, but I couldn’t find a reference. If you know of one, please let me know so I can update this post.

Chimera: auto update + run command

Equinox didn’t allow me to code sign a package, so I had to find a way around it.

I created what I called a chimera: a binary for my program that seems to be a regular one but is actually…

An installer that automatically downloads and installs a new version, replaces itself, and executes the new command from inside, passing along the environment variables, in the same current working directory, piping the standard input, output, and error, and exiting with the very same exit code.

Here is the implementation, if you are curious.
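Independently of the implementation linked above, the "run the new command from inside" part can be sketched in Go with the standard `os/exec` package. The function name `runForwarded` and the usage string are hypothetical, and the download-and-replace step is left out entirely.

```go
package main

import (
	"errors"
	"fmt"
	"os"
	"os/exec"
)

// runForwarded runs the binary at path with args, inheriting the environment,
// the working directory, and the standard streams, and returns the child's exit code.
func runForwarded(path string, args []string) (int, error) {
	cmd := exec.Command(path, args...)
	cmd.Env = os.Environ()
	cmd.Stdin, cmd.Stdout, cmd.Stderr = os.Stdin, os.Stdout, os.Stderr
	// cmd.Dir is left empty, so the child starts in the caller's current directory.

	err := cmd.Run()
	var exitErr *exec.ExitError
	if errors.As(err, &exitErr) {
		// The child ran and exited with a non-zero status.
		return exitErr.ExitCode(), nil
	}
	if err != nil {
		return -1, err // the child could not be started at all
	}
	return 0, nil
}

func main() {
	if len(os.Args) < 2 {
		fmt.Fprintln(os.Stderr, "usage: chimera <real-binary> [args...]")
		os.Exit(2)
	}
	code, err := runForwarded(os.Args[1], os.Args[2:])
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// Exit with the same code so callers cannot tell the wrapper was there.
	os.Exit(code)
}
```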

The good thing about it was that I no longer had to use Windows to release a new code-signed version each time I decided to ship one.

GitHub’s official command line tool (gh; but not related to the NodeGH I used to maintain a long time ago!) does the code signing on every release. If you want it, take a look at their code.

Summary

If you are a Valve customer, I’d say you can trust their word and expect that nothing bad is going to happen. While many people resort to obfuscation when working on closed-source software, I’d say Valve probably doesn’t, or they would already be screwed. In theory, issues might exist and be discovered more easily by bad actors now, but I wouldn’t worry about that either.

If you’re worried about this, you might consider buying a dedicated gaming machine to mitigate the risks, and this is a good measure if you can afford it.

If you are a software developer and found this post useful, please share your experiences and ideas too!
